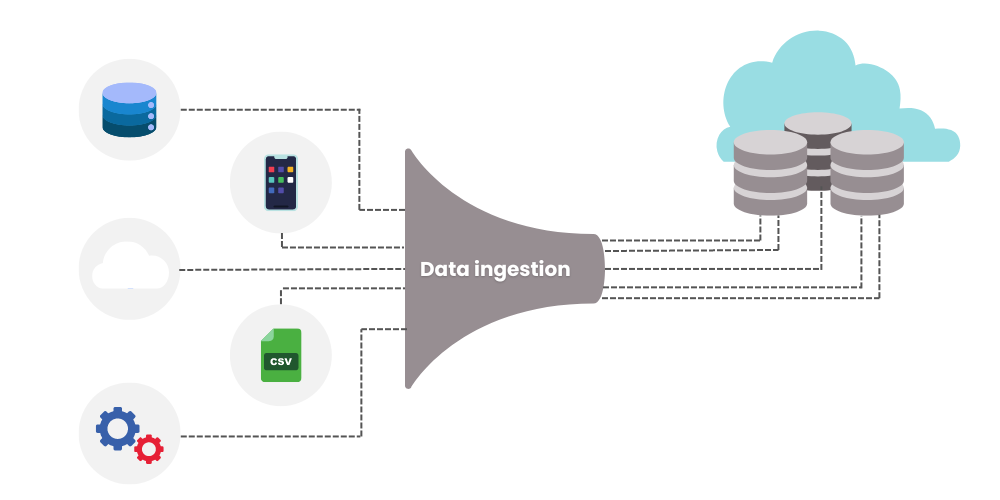

Data ingestion is a critical step in any data pipeline architecture. In this blog we explain what data ingestion is, look at the different types, and cover the tools you can use to move your data onto the Google Cloud Platform.

What is Data Ingestion on GCP?

Data ingestion is the process of collecting data from multiple sources and moving it to your cloud platform. It is the first step of the data transformation process, and getting it right is crucial before you start any data analytics or machine learning project, or when you simply want to store your data more securely and accessibly.

Types of Data Ingestion

Data ingestion sounds straightforward, but there's an art to doing it well. Depending on your organisational needs, you can choose between batch processing and real-time processing.

Batch Processing

Batch processing accumulates data over time, and the data ingestion tool processes it in batches at scheduled intervals. A batch run can also be triggered by certain conditions, such as incoming requests or changes in a system’s state.

This method is well suited to managing cost and resources. It is efficient for organisations that handle large volumes of data and work with non-time-sensitive data.
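To make the idea concrete, here is a minimal sketch of a batch buffer in plain Python. It is not a GCP API: the `BatchBuffer` class, the `max_records` and `max_age_seconds` settings, and the order records are all hypothetical names chosen for illustration. The buffer flushes when it is full or when the oldest record has waited long enough, which mirrors the "scheduled interval or trigger condition" behaviour described above.

```python
from typing import Callable, List, Optional


class BatchBuffer:
    """Accumulates records and hands them to a sink as one batch.

    A flush happens when `max_records` is reached, or when `max_age_seconds`
    have passed since the first buffered record. Both values are hypothetical
    tuning knobs for this sketch, not GCP settings.
    """

    def __init__(self, sink: Callable[[List[dict]], None],
                 max_records: int = 3, max_age_seconds: float = 60.0) -> None:
        self.sink = sink
        self.max_records = max_records
        self.max_age_seconds = max_age_seconds
        self._records: List[dict] = []
        self._first_seen: Optional[float] = None

    def add(self, record: dict, now: float) -> None:
        if not self._records:
            self._first_seen = now  # start the clock for this batch
        self._records.append(record)
        too_many = len(self._records) >= self.max_records
        too_old = now - self._first_seen >= self.max_age_seconds
        if too_many or too_old:
            self.flush()

    def flush(self) -> None:
        if self._records:
            self.sink(list(self._records))  # pass a copy to the sink
            self._records.clear()
            self._first_seen = None


loaded_batches: List[List[dict]] = []
buffer = BatchBuffer(sink=loaded_batches.append, max_records=3, max_age_seconds=60.0)

# Simulated clock: one order arrives per second.
for second, order_id in enumerate([101, 102, 103, 104]):
    buffer.add({"order_id": order_id}, now=float(second))
buffer.flush()  # load whatever is left at the end of the run
```

In this run the first three orders are loaded together and the fourth is loaded by the final flush, so the sink is called twice rather than four times.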

Real-time Processing

Real-time processing means raw data is ingested as soon as it is produced, so systems can respond dynamically to incoming data. In this case the data is not grouped into batches; it is ingested into the database one record at a time.

This approach is crucial for applications requiring immediate insights, such as fraud detection systems or live user interaction analysis.
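By contrast with batching, a record-at-a-time flow can be sketched as below. This is a plain Python illustration, not a GCP service call; the `ingest_stream` function, the `amount_limit` threshold, and the card events are assumptions made up for the example. The point is simply that each record gets a decision the moment it arrives.

```python
from typing import Iterable, Iterator


def ingest_stream(events: Iterable[dict], amount_limit: float = 1000.0) -> Iterator[dict]:
    """Handle each record as it arrives and flag it immediately."""
    for event in events:
        # No buffering: the decision is made per record.
        yield {**event, "suspicious": event["amount"] > amount_limit}


incoming = [
    {"card": "A", "amount": 25.0},
    {"card": "B", "amount": 4200.0},
    {"card": "A", "amount": 12.5},
]

# Collect the cards whose transactions were flagged on arrival.
flagged = [event["card"] for event in ingest_stream(incoming) if event["suspicious"]]
```

Because `ingest_stream` is a generator, it also works with a source that never ends, such as a socket or a message subscription.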

Each method has its perks, and Google Cloud has a suite of tools designed to handle any data challenge you throw at it. Let's dive into some of these tools and see how they can help you make the most out of your data.

Google Cloud Platform (GCP) Data Ingestion Tools

Dataflow

First up, Google Cloud Dataflow is a fully managed service for both real-time and batch data processing. It takes care of much of the complexity of stream and batch processing, which makes it a good fit for tasks such as analysing customer behaviour or streamlining log analytics.

Imagine you're trying to analyse customer behaviour on your website in real time; Dataflow can help you process that data as it comes in, giving you instant insights. It's like having a super-efficient assembly line that sorts, analyses, and packages your data without you having to lift a finger.
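Dataflow pipelines are written with the Apache Beam SDK. The sketch below deliberately avoids Beam so it runs anywhere, and only illustrates one idea a streaming pipeline typically relies on: grouping events into fixed time windows and aggregating each window. The function name, the 60-second window, and the click data are all hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple


def count_views_per_window(events: Iterable[Tuple[float, str]],
                           window_seconds: int = 60) -> Dict[int, Counter]:
    """Group (timestamp, page) events into fixed windows and count views per page."""
    windows: Dict[int, Counter] = defaultdict(Counter)
    for timestamp, page in events:
        # Round the timestamp down to the start of its window.
        window_start = int(timestamp // window_seconds) * window_seconds
        windows[window_start][page] += 1
    return dict(windows)


clicks = [
    (3.0, "/home"),
    (42.0, "/pricing"),
    (59.9, "/home"),
    (61.0, "/home"),
    (118.0, "/pricing"),
]
views = count_views_per_window(clicks)
```

Here `views` holds one counter for the window starting at 0 seconds and one for the window starting at 60 seconds. A managed service adds what this sketch leaves out, such as late-arriving data, scaling across workers, and fault tolerance.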

Pub/Sub

Next, we have Google Cloud Pub/Sub, which stands for Publish/Subscribe. This service is all about real-time communication in your systems. It lets you send and receive messages between independent applications.

Think of it as the town crier of your data ecosystem, shouting out updates that any part of your application can listen to and react to in real time. It's fantastic for event-driven architectures and can scale massively without breaking a sweat.
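The publish/subscribe pattern itself fits in a few lines. The toy broker below runs in a single process and is not the Pub/Sub client library; `InMemoryBroker`, the `orders` topic, and the two inboxes are hypothetical. It shows the key property: the publisher knows only the topic name, not who is listening.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List


class InMemoryBroker:
    """Toy broker: publishers and subscribers share nothing but a topic name."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: dict) -> int:
        """Deliver the message to every subscriber; return how many received it."""
        for callback in self._subscribers[topic]:
            callback(message)
        return len(self._subscribers[topic])


broker = InMemoryBroker()
billing_inbox: List[dict] = []
analytics_inbox: List[dict] = []
broker.subscribe("orders", billing_inbox.append)
broker.subscribe("orders", analytics_inbox.append)

# One publish call reaches both independent consumers.
delivered = broker.publish("orders", {"order_id": 101})
```

Adding a third consumer later means one more `subscribe` call and no change to the publisher, which is what makes the pattern a good fit for event-driven designs.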

Dataproc

For those dealing with big data processing using open-source tools like Hadoop and Spark, Google Cloud Dataproc is your best friend. It's a managed service that lets you run these big data frameworks efficiently, scaling quickly and reducing your processing times dramatically.

Dataproc is like having an expert team that sets up, manages, and scales your big data operations so you can focus on extracting value from your data without worrying about the underlying infrastructure.
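Frameworks like Hadoop and Spark split a large dataset into partitions, process each partition separately, and then merge the partial results. The plain Python sketch below imitates that map-and-reduce shape on two tiny partitions of log lines. It does not use Spark or Dataproc, and the function names and sample data are hypothetical.

```python
from collections import Counter
from functools import reduce
from typing import List


def count_words(partition: List[str]) -> Counter:
    """Map step: count words within one partition of log lines."""
    return Counter(word for line in partition for word in line.lower().split())


def merge_counts(left: Counter, right: Counter) -> Counter:
    """Reduce step: combine the partial counts from two partitions."""
    return left + right


# On a cluster, each partition would live on a different worker.
partitions = [
    ["error timeout", "ok"],
    ["ok ok", "error disk"],
]
totals = reduce(merge_counts, map(count_words, partitions), Counter())
```

Because each partition is counted independently, the map step can run in parallel on as many machines as there are partitions, which is where a managed cluster earns its keep.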

Cloud Functions

Google Cloud Functions is perfect for those who want to run backend code in response to events triggered by Google Cloud services or HTTP requests without managing servers. It's incredibly flexible, allowing you to automatically process images, update data, and even respond to online transactions as they happen. It's like having a little robot that does exactly what you need right when you need it without having to build and maintain a whole factory.
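As a rough sketch of what such event-handling code can look like, the function below assumes it is triggered by a Pub/Sub message whose payload arrives base64-encoded in a `data` field. The exact handler signature depends on the runtime and function generation, so the deployment wiring is left out; `parse_order_event`, the order fields, and the review rule are hypothetical.

```python
import base64
import json


def parse_order_event(event: dict) -> dict:
    """Decode a base64-encoded JSON payload from the event's `data` field."""
    payload = base64.b64decode(event["data"]).decode("utf-8")
    order = json.loads(payload)
    # Hypothetical business rule: tag large orders for manual review.
    order["needs_review"] = order["amount"] > 1000
    return order


# Simulate the event a publisher would produce for one order.
raw_order = json.dumps({"order_id": 101, "amount": 1500}).encode("utf-8")
sample_event = {"data": base64.b64encode(raw_order).decode("ascii")}

result = parse_order_event(sample_event)
```

Keeping the decoding and business logic in a plain function like this makes it easy to test locally before attaching it to a real trigger.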

Cloud Composer

Google Cloud Composer is a fully managed workflow orchestration service built on Apache Airflow. It's designed for creating, scheduling, and monitoring complex workflows across your cloud environment.

Whether you're integrating data from various sources, managing data pipelines, or automating data processing tasks, Cloud Composer coordinates everything smoothly. Think of it as the conductor of an orchestra, making sure every section comes in at the right time to create a beautiful symphony of data.
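Underneath, a workflow of this kind is a dependency graph: each task lists the tasks that must finish before it can start. The sketch below uses Python's standard `graphlib` module (Python 3.9+) rather than Airflow itself, purely to show how a valid run order falls out of those dependencies. The task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks that must finish before it starts.
pipeline = {
    "extract_orders": set(),
    "extract_customers": set(),
    "join_datasets": {"extract_orders", "extract_customers"},
    "load_warehouse": {"join_datasets"},
    "refresh_dashboard": {"load_warehouse"},
}

# static_order() yields the tasks in an order that respects every dependency.
run_order = list(TopologicalSorter(pipeline).static_order())
```

The two extract tasks have no dependency on each other, so they can come out in either order (and an orchestrator could run them in parallel), but the join, load, and refresh steps always follow in that sequence.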

Application Integration Services

Finally, we have Google Cloud's Application Integration Services, which are all about making sure your applications can talk to each other and to other services seamlessly. This includes event-driven functions, API management, and app connectors. It's like the social butterfly of your cloud architecture, ensuring everyone's connected and communicating effectively so that your data flows smoothly from one service to another.

In the world of data, getting your ingestion strategy right sets the stage for everything you want to do next. Whether you're processing big data with Dataproc, reacting to events in real time with Pub/Sub, automating workflows with Cloud Composer, or leveraging any of Google Cloud's other powerful tools, there's a solution tailored to meet your needs. The key is to understand your data, know your objectives, and pick the tools that align with your goals.

And remember, you're not alone on this journey. As a Google Cloud partner, we're here to guide you through choosing and implementing the right data ingestion strategies for your business. Let's unlock the potential of your data together!