Before we start; this is a practical guide, not a comprehensive guide. We want to write that too, but we'll keep things nice and lean for this article's purposes so that you can get started as fast as possible.

Hello and welcome 👋

This blog post covers some of the differences between dbt and Dataform and some watchpoints you should keep in mind when converting from one platform to the other. For a more comprehensive comparison between the two tools, you can also have a look at our previous blog:

Dataform vs DBT, differences and migration paths

⚠️Warning - This gets a little technical in some places. Anyone that knows how to write SQL and use dbt should be able to follow along, though.

Painting the picture 🖼️

We like to show by example. It's all well and good for us to tell you how to migrate from dbt to Dataform, but we'd prefer to take you on the journey.

Imagine you're a business, don't have huge data modelling capacity, and you want to integrate your financial data into BigQuery. You're running Xero, and you've decided that you want to bring that data into your warehouse because the standard Xero reporting isn't cutting it, or you may want to merge it with other data sources.

You use Fivetran to bring the data out, but shucks, the schema is an absolute nightmare! Journals, credit notes, the lot - how am I gonna make ANY sense of this? Fortunately enough, Fivetran provides us with a neat little data model you can run on dbt to make the data much more usable.

But you're on Google; you run BigQuery. You want to use Dataform because of the super neat BigQuery integration, and it's free. There are more reasons, but we're keeping this short.

For readers that might not know, Xero is a cloud-based accounting software platform that allows businesses to manage their finances and accounting operations.

Fivetran is a cloud-based data integration platform that automates data extraction from various sources and loads it into a destination data warehouse, such as BigQuery

With Fivetran, we can easily automate the process of loading data from Xero into BigQuery, Google's enterprise data warehouse. Once the data is loaded into BigQuery, we can use Dataform to transform and analyse the data.

So, how do we use this dbt model on Dataform? Read on!

Modelling data with Dataform 🗃️

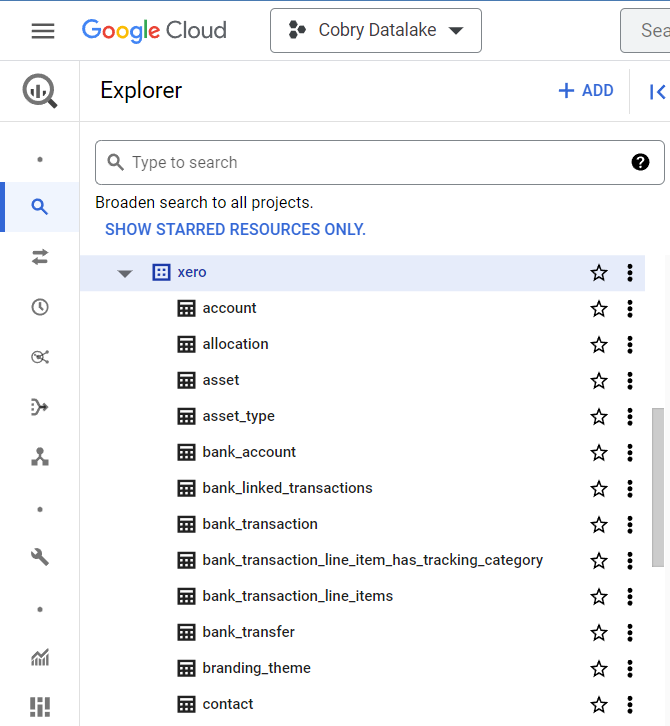

⚠️We assume you could use Fivetran to export your Xero data into a BigQuery data set. Once the syncing is complete, it should look something like this:

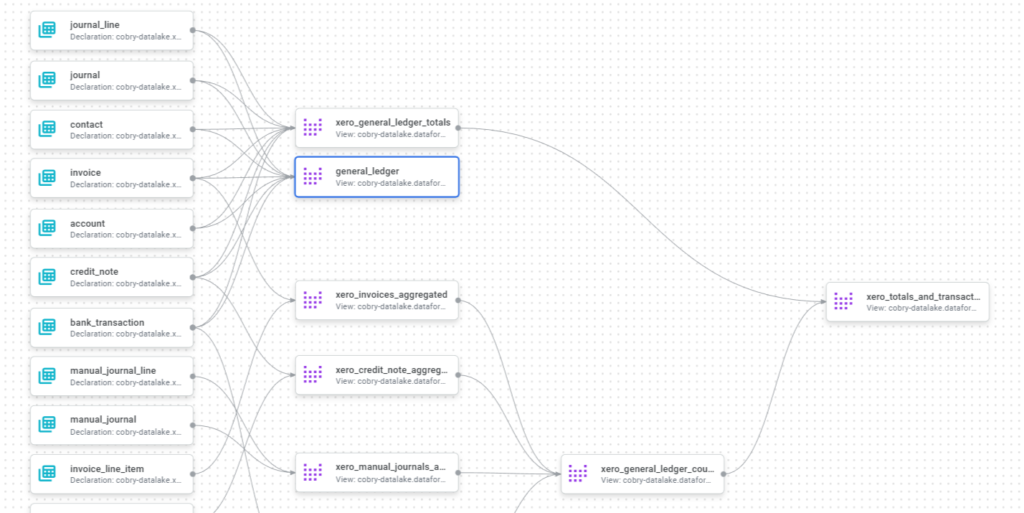

The data set contains several tables, each with valuable data that can be queried or used in a dashboard. However, this isn't too usable; this needs to be modelled first before it's used by your average users. In our use case, we want to create a General Ledger that contains all types of transactions, such as invoices, bank transactions, manual journals, and credit notes. This way, we can easily explore the data.

This is where Dataform comes into play. We can use it to create a data model for a General Ledger.

🃏 We have one more card up our sleeve. Remember I mentioned that neat little model? Instead of writing the data model from scratch, we'll reuse existing open-source code by converting code from the Xero Transformation dbt Package to be compatible with Dataform.

Before we get into the steps, a note:

Since our goal was to create a General Ledger as fast as possible, we skipped some of the staging steps from the dbt Package. By following these steps, you should be able to generate a working General Ledger, but you might need to tweak certain things depending on your requirements.

So, let's get started; these are the steps we had to take:

Step 1. Set up the landing zone (by creating folders and configuring the dataform.json file)

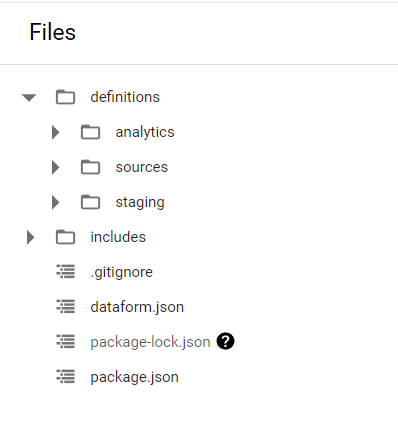

Folder structure! You should probably check out the best practices documentation on the Dataform website, but this is what it should look like. Nothing crazy, but it does differ from dbt.

The tl;dr:

- /sources - where you define your SQL transformations. We'll be working here, mostly.

- /reporting or /analytics - where you define datasets that represent entities that will be consumed by downstream users (BI Tools, Reports etc.)

- /staging - where you define intermediary datasets

- /includes - where you put reusable javascript or SQL snippets that can be included in your models

This is an example of best practice for setting the folders in a Dataform project

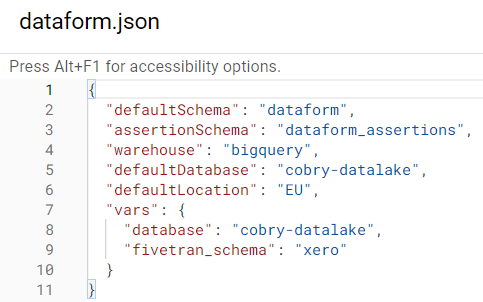

This is also how the dataform.json file looks like:

The values you use here will depend on where you want your resulting tables and views to reside in your warehouse.

⚠️ We defined two variables we will reuse throughout the Dataform code in the vars object. Ensure these match the database and schema you used when setting up the Fivetran connector.

Step 2. Create sources files

Dive into your sources folder! Make these files:

- journal

- journal_line

- account

- invoice

- bank_transaction

- credit_note

- contact

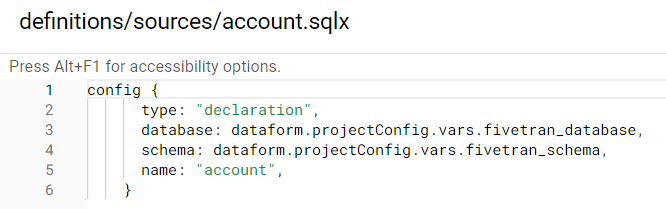

They should look a little like this. It's how you connect to your external sources in Dataform. It's more or less a file that reads from your Dataform config.

An example source file for the account table

You can notice that for the database and schema, we have referenced the variables we defined in Step 1.

Step 3. Create the General Ledger file

As we mentioned previously, we will not write this file from scratch but rather repurpose the code for the General Ledger model from the Xero Transformation dbt Package.

Thus, we created a new file under the analytics folder:

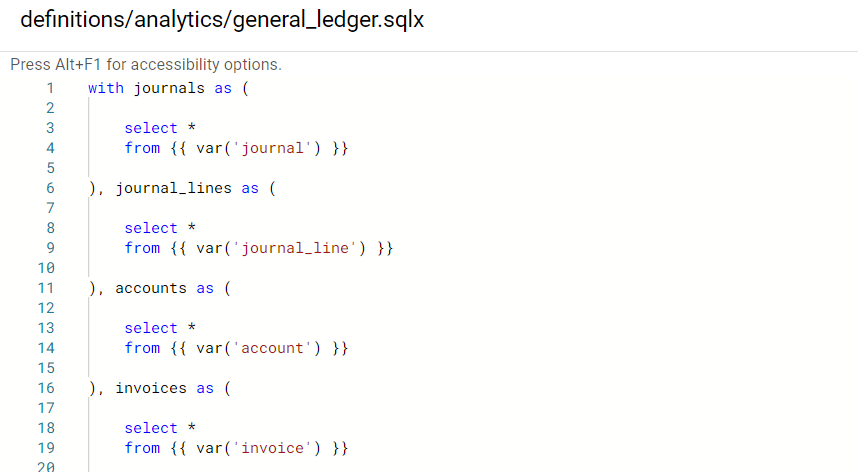

The first couple of rows from the general_ledger file

Do not get worried at this point if you notice a red exclamation mark. This is caused by the errors in syntax caused by importing a dbt model directly into Dataform. We will take care of that in the next step.

Also, remember to add the config flag at the top of your file like this:

config { type: "view"}In the end, this will result in a view in BigQuery instead of a table, but for our use case, this is ideal since we will not be configuring any automatic scheduling in this tutorial. As a general rule, use views by default (they're cheap and quick), and use tables for datasets you expect to have downstream users for performance reasons

Step 4. Update the General Ledger code for Dataform

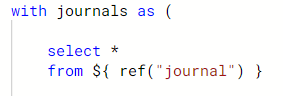

The first thing we will do is to replace the {{ var() }} functions for each source file with ${ ref() } functions like this:

Original dbt code

Updated Dataform code

Since we aimed to get the General Ledger up and running as fast as possible, we took some liberties. One of them was to remove all the conditional clauses found in the dbt model.

For example, the following code:

{% if var('xero__using_bank_transaction', True) %}

), bank_transactions as (

select *

from {{ var('bank_transaction') }}

{% endif %}

{% if var('xero__using_credit_note', True) %}

), credit_notes as (

select *

from {{ var('credit_note') }}

{% endif %}

),

Was replaced with:

bank_transactions as (

select *

from ${ref("bank_transaction")}

)

, credit_notes as (

select *

from ${ref("credit_note")}

),

We did this because, for our situation, both boolean variables set in the debt model (xero__using_bank_transaction and xero__using_credit_note) should be true.

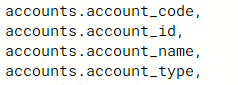

Lastly, because we skipped the staging part of the dbt model, some of the fields we were interested in had different names than the ones the model was expecting. We fixed that as well like this:

Step 5. Execute code and explore the data

With everything set in place, we can execute the code and, after a couple of seconds, receive a message that our General Ledger is waiting in BigQuery for us. ✅

Now that the General Ledger is in BigQuery, we can use it to gain insights into our financial data. To quickly do this, we can export the data to Google Sheets using Connected Sheets or, even better, create a dashboard with Looker 🎉

Bonus; you get a neat little graph

To summarise, here are some things you should look out for when converting from dbt to Dataform:

Folder Structure

One difference between dbt and Dataform is the folder structure. In dbt, projects are structured around “models” - SQL files that define a transformation. In Dataform, projects are structured around “tables” - SQLX files that define a table.

References

dbt’s {{ ref() }} and {{ source() }} calls need to be replaced with ${ ref() } within Dataform.

Sources

You need to define your sources to represent your data inputs.

Variables

In dbt, you might use the {{ var() }} function to reference variables from your dbt_project.yml file into models during compilation. By comparison, in Dataform, you can access variables defined in your dataform.json file by using dot notation:

dataform.projectConfig.vars.name_of_variableMacros and functions

While in dbt, you might define a macro to help you save time with a commonly used operation you need to make, in Dataform, you will have to convert that into a Javascript function.

Conclusion

Thank you for reading this practical guide on converting from dbt to Dataform. We hope that you found it helpful and informative. If you have any questions or want to learn more about leveraging Dataform for your data modelling needs, don't hesitate to contact us.

If you're reading this, you may want some help migrating your dbt models to Dataform. We can do that for you! If you'd like us to do it, then drop your email below and we'll reach out to you